The Maps of AI

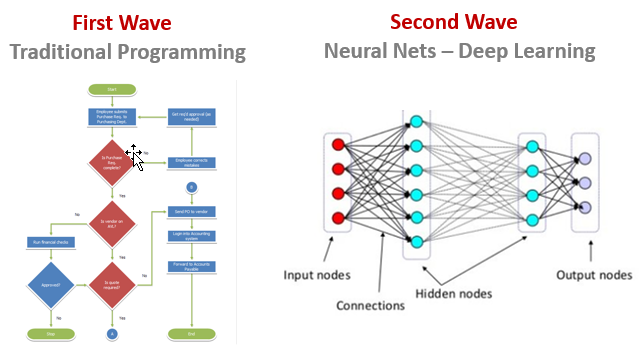

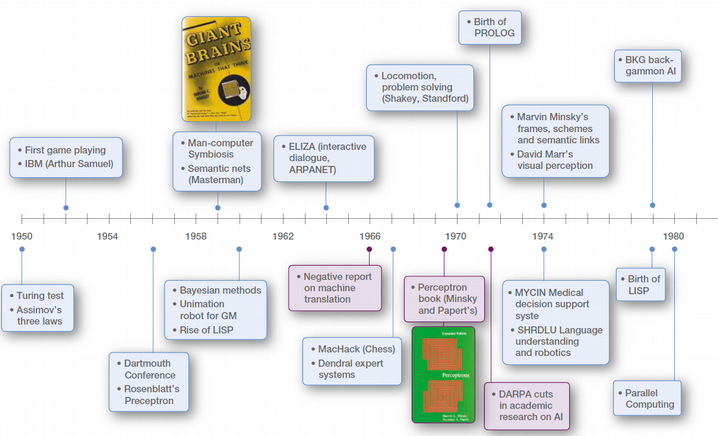

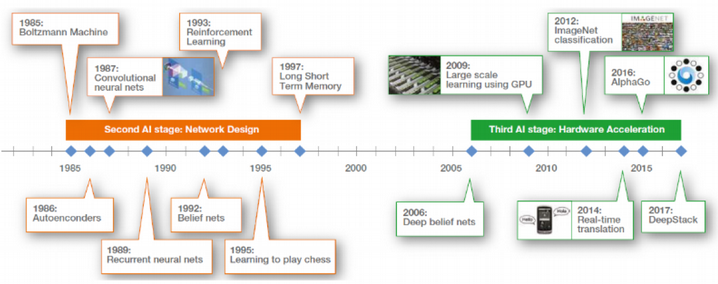

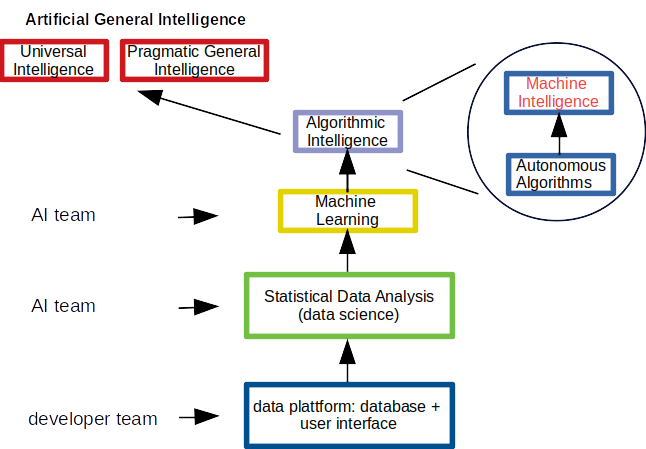

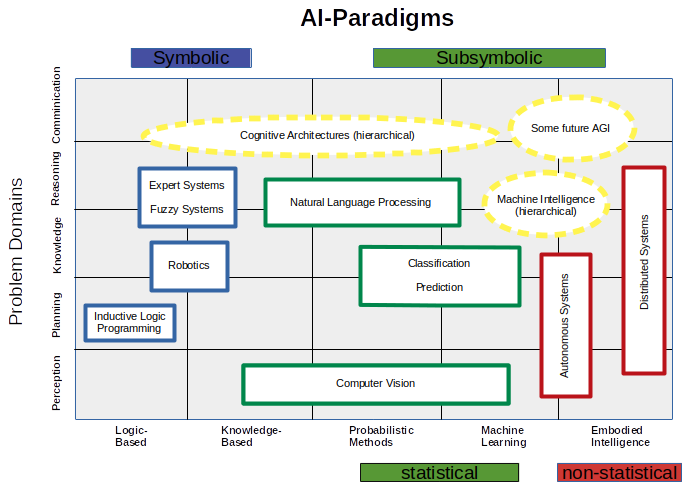

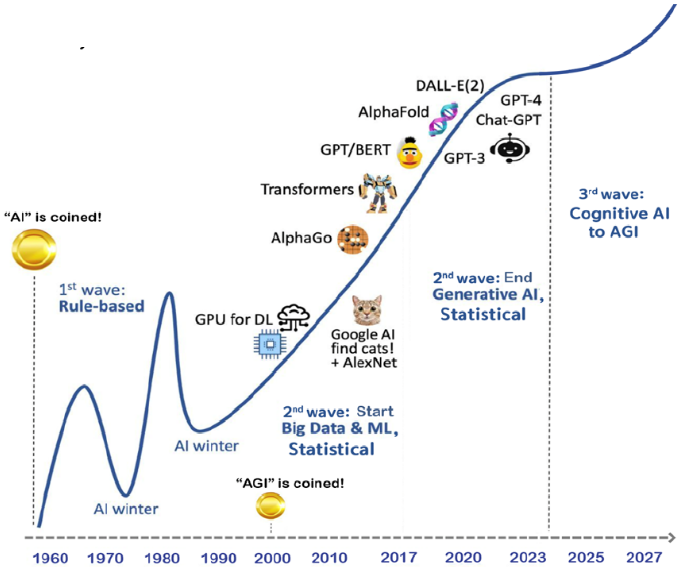

What does it mean to talk about AI if it is realized by algorithms, i.e. sequences of assignments and statements? Historically, one can distinguish three developmental stages of AI maturity: Handcrafted Knowledge, Statistical Learning and Contextual Adaptation. Statistical Learning is the state of the art (SOTA) and is not yet fully exhausted. Contextual Adaptation, or Cognitive AI realized by cognitive architectures or neuro-symbolic AI, might be the future of algorithmic intelligence. Let us dive a little deeper into the historical sequence of AI paradigms.

I. The Paradigm of Handcrafted Knowledge

This approach started around 1992, after the second AI winter (1980-1986). A good example of this GOFAI (Good Old-Fashioned AI) approach is the DARPA Autonomous Vehicle Grand Challenge. The paradigm can be characterized as follows:

- Engineers create sets of rules to represent knowledge in well-defined domains.

- The structure of the encoded knowledge is defined by humans. Only the specifics are explored by a machine.

- Reasoning over narrowly defined problems, no learning capability and poor handling of uncertainties in data.

- There is a symbol-processing engine.

- Compositionality holds, in the sense that the structure of expressions indicates how they should be interpreted.

- There are recursive knowledge structures.
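To make this list concrete, here is a minimal sketch of a symbol-processing engine: a forward-chaining interpreter over handcrafted if-then rules. The rule symbols are hypothetical driving concepts chosen for illustration; they are not taken from any actual Grand Challenge vehicle. Nothing is learned here: the engine only derives what the human-written rules already imply, and derived symbols can themselves trigger further rules.

```python
# Hypothetical rule base: each rule maps a set of premise symbols to a conclusion.
RULES = [
    ({"obstacle_ahead", "lane_left_free"}, "steer_left"),
    ({"obstacle_ahead", "lane_left_blocked"}, "brake"),
    ({"steer_left"}, "signal_left"),  # fires on a symbol derived by another rule
]


def forward_chain(facts, rules):
    """Apply rules repeatedly until no new symbol can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires when all of its premises are already known.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


derived = forward_chain({"obstacle_ahead", "lane_left_free"}, RULES)
print(sorted(derived))
```

The structure of the knowledge (which symbols exist and how they combine) is fixed entirely by the engineer, which is exactly why such systems handle narrowly defined problems well but cannot cope with situations their rules do not anticipate.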

II. The Paradigm of Statistical Learning

After the second AI winter, an ice age for AI lasted from 1996 to 2006. It was caused by mathematicians prioritizing the development of non-parametric statistics, in which statistics dropped its distributional assumptions and met first-order optimization. This move made it possible to develop the current statistical, i.e. data-based or subsymbolic, approach to AI. A good example is the recognition of handwritten digits. Let us characterize this paradigm as follows:

- Engineers create statistical models for specific problem domains and train them on big data.

- Using the manifold hypothesis: natural data forms lower-dimensional structures (manifolds) in the embedding space, where each manifold represents a different entity. Understanding data comes from separating the manifolds.

- However, there are only nuanced classification and prediction capabilities, no contextual capability and minimal reasoning ability. The results are statistically impressive but individually unreliable.
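The manifold hypothesis can be illustrated with a toy example, a sketch built on synthetic data rather than a real dataset: two entities are modelled as noisy circles, i.e. one-dimensional manifolds embedded in a two-dimensional space. No straight line in the (x, y) plane separates the inner circle from the outer one, but a coordinate adapted to the manifolds (here the radius) separates them trivially. The radii, noise level and threshold are assumptions for illustration.

```python
import math
import random

random.seed(0)


def sample_circle(radius, n, noise=0.05):
    """Sample n noisy points from a circle: a 1-D manifold embedded in 2-D."""
    points = []
    for _ in range(n):
        angle = random.uniform(0.0, 2.0 * math.pi)
        r = radius + random.gauss(0.0, noise)
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


inner = sample_circle(1.0, 200)  # entity A
outer = sample_circle(3.0, 200)  # entity B


def classify(p, threshold=2.0):
    # The radius is an intrinsic coordinate that tells the two manifolds apart.
    return "A" if math.hypot(p[0], p[1]) < threshold else "B"


correct = sum(classify(p) == "A" for p in inner) + sum(classify(p) == "B" for p in outer)
accuracy = correct / (len(inner) + len(outer))
print(f"accuracy: {accuracy:.3f}")
```

In deep learning, such a separating coordinate is not handed to the model as it is here; finding it from data is precisely what training is meant to achieve.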

The key assumptions are these: according to subsymbolic theory, information is processed in parallel by simple calculations carried out by neurons. In this approach, information is represented by a simple sequence of pulses. Subsymbolic models are based on the metaphor of the human brain, in which the brain's cognitive activities are interpreted through theoretical concepts that originate in neuroscience. Hence:

- Knowledge is not represented in symbolic form.

- The program control is typically represented by a large number of numeric parameters.

- These numeric parameters may collectively define some behaviour (a mapping from input senses to output actions) or a pattern classifier (e.g. handwriting recognition).

- These numeric parameters are then modified by a large number of interactions with the world (e.g. the real world, the problem, a software world) until the program works.

- It is notoriously difficult to determine these parameters from mathematical theory.
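A classic perceptron illustrates these points in a few lines. It is a toy sketch, not a model of any particular system: the program's "knowledge" of logical OR lives only in three numbers (two weights and a bias), which are adjusted through repeated interactions with training examples until every example is answered correctly. The learning rate and epoch limit are arbitrary assumptions.

```python
# Training data for logical OR: the "world" the program interacts with.
DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]


def predict(weights, bias, x):
    # Behaviour is fully determined by numeric parameters, not by symbols.
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(data, epochs=20, lr=0.1):
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in data:
            error = target - predict(weights, bias, x)
            if error != 0:
                # Perceptron rule: nudge the parameters toward the correct answer.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
                errors += 1
        if errors == 0:  # the program "works" on every interaction
            break
    return weights, bias


weights, bias = train_perceptron(DATA)
print(weights, bias, [predict(weights, bias, x) for x, _ in DATA])
```

Inspecting the final weights tells us little about "why" the program computes OR, which reflects the last point above: the parameters are found by interaction, not derived from theory.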

This paradigm, also called weak statistical narrow AI, currently dominates. The most prominent problems of weak statistical narrow AI today, and the techniques used to tackle them, are:

- Managing the complexity of decisions: via multi-agent systems

- Learning control from perception of the environment: via deep reinforcement learning

- Mastering non-monotonic conclusions: via case-based reasoning or probabilistic reasoning on graphs

- Robust recognition of patterns in space and time: via deep learning

- Multi-modality: via synthetic data and contrastive learning
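Of the techniques listed, learning control from perception can be illustrated most compactly. The sketch below swaps deep reinforcement learning for its simplest relative, tabular Q-learning (no neural network), on a hypothetical five-state corridor; the environment, rewards and hyperparameters are all assumptions for illustration. The agent is never told the rule "go right"; it infers a control policy purely from rewards.

```python
import random

random.seed(1)

N_STATES = 5        # states 0..4 on a line; state 4 is the goal
ACTIONS = (-1, +1)  # move left or move right


def step(state, action):
    """Hypothetical environment: reward 1 only when the goal is reached."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if nxt == N_STATES - 1 else 0.0
    return nxt, reward, nxt == N_STATES - 1


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2):
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore with probability epsilon, else exploit.
            if random.random() < epsilon:
                action = random.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            nxt, reward, done = step(state, action)
            best_next = max(q[(nxt, a)] for a in ACTIONS)
            target = reward if done else reward + gamma * best_next
            # Move the estimate a fraction alpha toward the bootstrapped target.
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = nxt
    return q


q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)
```

Deep reinforcement learning replaces the table `q` with a neural network so that the same update can work on raw perceptual input, such as images, where enumerating states is impossible.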

The corresponding scope of today's main applications can be described as follows:

- Natural Language Processing (NLP/NLU) to enable communication

- Representation of human knowledge development

- Automated reasoning to use the stored information to answer questions and to draw new conclusions

- Machine learning to adapt to new circumstances and to detect and extrapolate patterns

- Computer vision to perceive the objects

- Robotics to manipulate objects

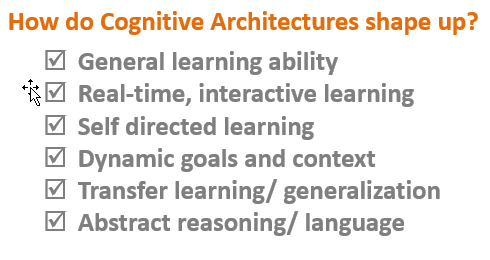

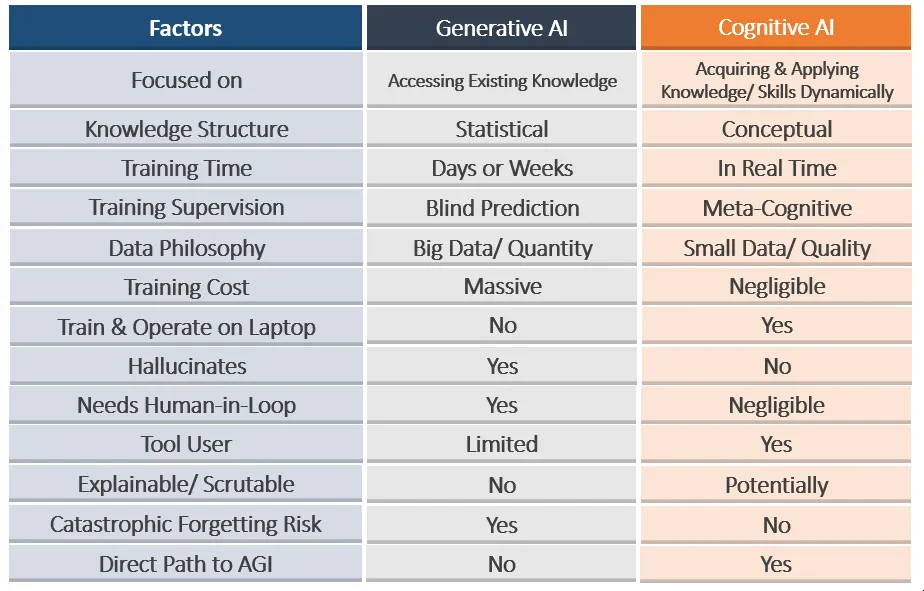

III. The Upcoming Paradigm of Cognitive AI

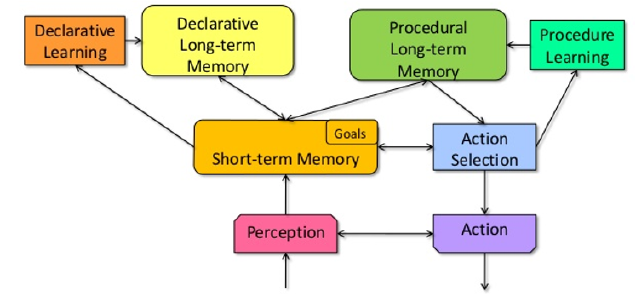

Most cognitive architectures developed in the past are highly modular, using, for example, distinct modules for short-term memory (STM), long-term memory (LTM), parsing, inference, planning, etc. Moreover, many of these functional modules were usually designed by separate teams with little (if any) overall coordination. This is a serious limitation: the modules tend not to share a uniform multi-modal data representation or design, which makes it nearly impossible for cognitive functions to support each other synergistically in real time. A much better, indeed essential, approach is a highly integrated system that allows all functions to interact seamlessly: a cognitive architecture.
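One simple way to picture the integration called for here is a blackboard-style design, used as an illustrative stand-in rather than any specific published cognitive architecture. All modules (the hypothetical `perception`, `long_term_memory` and `planning` functions below) read from and write to one store with a uniform representation, so the output of one module is immediately usable by the others.

```python
from dataclasses import dataclass, field


@dataclass
class Blackboard:
    """Single shared store: every module reads and writes the same format."""
    items: list = field(default_factory=list)  # (source, key, value) triples

    def post(self, source, key, value):
        self.items.append((source, key, value))

    def lookup(self, key):
        return [value for _, k, value in self.items if k == key]


# Hypothetical modules; each one is a function over the shared blackboard.
def perception(bb):
    bb.post("perception", "seen", "red light")


def long_term_memory(bb):
    if "red light" in bb.lookup("seen"):
        bb.post("ltm", "rule", "red light means stop")


def planning(bb):
    if "red light means stop" in bb.lookup("rule"):
        bb.post("planning", "action", "stop")


bb = Blackboard()
for module in (perception, long_term_memory, planning):
    module(bb)
print(bb.lookup("action"))
```

The design choice worth noting is that no module needs an adapter for another module's private format; with separately designed modules, each of those hand-offs would require its own translation layer.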

In the paradigm of Cognitive AI, one possible future of narrow AI can be characterized as follows:

- Generative models, e.g. realized by generative adversarial networks, construct contextual explanations for classes of real-world phenomena based on multi-modal data. This may be called contextual adaptation.

- Fallible algorithms become capable of non-inductive and non-monotonic reasoning as well as direct pattern recognition. First approaches in this field were based on sequential RNNs.

- As a first form of AGI, algorithms will start to understand by using self-supervision to construct models.

However, we also know that some highly desirable features of AI algorithms are still missing:

- These include a general and robust learning ability beyond pursuing goals, predicting the occluded from the visible, interactive learning, self-correction, transfer learning at the level of problems, abstract and counterfactual reasoning, and dynamic adaptation of goals to a changing context.

The upper figure shows a task segmentation in cognitive architectures based on the key concepts of memory, grounding (i.e. testing and data collection), learning, and decision making.

In this sense, Cognitive AI uses explicitly programmed structures, limited to some domain and not learned from data, to generate and change an internal informational state of the algorithm that is essential for accomplishing some cognitive task, while the whole process is driven by empirical data. There is hope that such cognitive architectures will shrink the inevitable learning period from the decades needed by humans to weeks.
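A toy sketch can show how the four concepts of memory, grounding, learning and decision making might be wired into one loop, assuming a simple task of choosing among three options with unknown success rates (technically an epsilon-greedy bandit). The agent's structure is programmed by hand and never learned; only its internal state, the counts in `memory`, changes with data. `CognitiveAgent`, `TRUE_RATES` and the parameters are hypothetical, and this is an illustrative reading of the paragraph, not a description of any existing architecture.

```python
import random

random.seed(2)

# Hypothetical environment: three options with success rates unknown to the agent.
TRUE_RATES = {"a": 0.2, "b": 0.5, "c": 0.8}


class CognitiveAgent:
    """Explicitly programmed structure; only its internal state is data-driven."""

    def __init__(self, options):
        # Memory: number of trials and successes per option.
        self.memory = {o: {"trials": 0, "successes": 0} for o in options}

    def estimate(self, option):
        m = self.memory[option]
        return (m["successes"] + 1) / (m["trials"] + 2)  # Laplace-smoothed rate

    def decide(self, epsilon=0.1):
        # Decision making: usually exploit the best estimate, sometimes explore.
        if random.random() < epsilon:
            return random.choice(list(self.memory))
        return max(self.memory, key=self.estimate)

    def ground(self, option):
        # Grounding: test the option in the world and collect an observation.
        return random.random() < TRUE_RATES[option]

    def learn(self, option, success):
        # Learning: fold the new observation into memory.
        self.memory[option]["trials"] += 1
        self.memory[option]["successes"] += int(success)


agent = CognitiveAgent(TRUE_RATES)
for _ in range(2000):
    choice = agent.decide()
    agent.learn(choice, agent.ground(choice))
print(max(agent.memory, key=agent.estimate))
```

Because the structure is fixed in advance, the agent needs only as much data as it takes to fill its small internal state, which hints at why structured architectures are hoped to shorten learning periods.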

- Recall that the idea of cognitive architectures is not new. However, the variety of their algorithmic realizations is still an open research field that the leading BigTech companies, except IBM, have avoided until now. This last point will become crucial for every data-driven startup, because it means that a scientific omission coincides with an economic opportunity.

A Strategic Look at the Current Subsymbolic Paradigm of Statistical Learning

There is no doubt that most contributions to algorithmic intelligence have not progressed beyond the stage of engineering, and consistent mathematical theories justifying these numerical algorithms are hardly available. Famous exceptions include Statistical Learning Theory and the Information Bottleneck Theory of Deep Learning.

There is still a big gap between the expectations, which are at least partly shared by the AI community, and the achievements shown so far. In particular, no algorithm currently goes beyond weak AI. This leads us to the following current approaches to algorithmic intelligence in the statistical paradigm of weak AI:

AI as a successful Pursuit of Goals

Let's take stock of developments over the last 30 years: the result is sobering. Since the most widely used success metric in the field of weak AI has been the algorithmic achievement of specific observable skills, the historic development of AI has had nothing to do with a series of inventions realizing a highly adaptable, on-the-fly behavior-generation engine for non-repeating tasks under changing conditions, not at the level of a species but at the level of the individual. AI, as we have pursued it so far, is more about solving these tasks algorithmically without featuring any form of non-human intelligence, because for a specific task this is much easier than solving the general problem of intelligence. One example is the detection of complex dependencies in big data by deep learning, which in fact seems reducible to stochastic function approximation by non-smooth first-order optimization, the core idea behind deep learning.
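To make "stochastic function approximation by non-smooth first-order optimization" tangible, here is a deliberately tiny sketch. It fits a line to noisy samples of an assumed unknown function (y = 2x + 1 plus noise) with stochastic subgradient descent on the absolute error. That loss has no gradient where the residual is zero, so the sign of the residual serves as a subgradient; in deep networks the same kind of non-smoothness comes from ReLU activations. The data, step-size schedule and iteration count are illustrative assumptions.

```python
import math
import random

random.seed(3)

# Samples from an "unknown" function, here assumed to be y = 2x + 1 plus noise.
data = [(x, 2.0 * x + 1.0 + random.gauss(0.0, 0.1))
        for x in (random.uniform(-1.0, 1.0) for _ in range(500))]


def sign(r):
    return (r > 0) - (r < 0)  # a valid subgradient of |r| (0 at r == 0)


a, b = 0.0, 0.0
for t in range(1, 20001):
    x, y = random.choice(data)   # stochastic: one sample per step
    residual = a * x + b - y
    g = sign(residual)           # subgradient of the absolute error
    step = 0.5 / math.sqrt(t)    # diminishing step size
    a -= step * g * x            # first-order update: only (sub)gradients used
    b -= step * g
print(f"a = {a:.2f}, b = {b:.2f}")
```

Nothing in this loop "understands" the linear relationship; the parameters simply drift toward values that reduce the error on sampled data, which is the sense in which the paragraph calls deep learning function approximation.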

AI in the current paradigm of statistical learning is also more profitable: if you fix the task to be solved before the algorithm starts, you remove the need to handle uncertainty and novelty in the data the algorithm learns from, which are the bottlenecks in every AI approach. And since the nature of intelligence is the ability to handle novelty and uncertainty, you are ironically removing the need for intelligence. Unsurprisingly, the focus on achieving task-specific performance, while placing no conditions on how these algorithmic systems arrive at this performance, has led to data-driven systems that, despite performing the target tasks quite well, largely do not feature the sort of human intelligence that the field of AI set out to build at its beginning. We are therefore forced to conclude that, if intelligence lies in the process of acquiring skills, then there is no task X such that skill at X demonstrates intelligence, unless X is actually a meta-task involving skill acquisition across a broad range of tasks. So for AI systems to reach their full potential, we should develop quantitative methods to measure the performance of algorithms on standardized tasks and to compare them with human cognition. As a consequence, there is no simple take-home message about the nature or the possible future of algorithmic intelligence on the basis of the work completed so far.

Finally, taking seriously the insights into algorithmic intelligence from the last paragraphs and summarizing, on a coarse-grained level, the research activities detectable in publications over the last decades, we arrive at a map of topics that illustrates the state of the art in AI:

In the upper figure, yellow dotted lines mark cutting-edge research areas. The other topics are more or less established, at least on an engineering level.

In sum, over time the growing capabilities of AI systems seem to follow these evolutionary dynamics:

Limitations of Current Weak Narrow AI

However, there is obviously a long way to go. Human intelligence is the cognitive ability to understand the world, to achieve a wide variety of novel goals, and to autonomously integrate, adapt and reuse such knowledge and skills via ongoing incremental learning with limited resources. What sets human intelligence apart is our unique ability to recursively form abstract concepts on top of existing abstractions. This core feature is also the key both to our ability to learn language and to our relatively high level of self-awareness. Hence we arrive at an incomplete list of general problems of the statistical learning paradigm of subsymbolic AI:

- Even if algorithms think, there is no consciousness, no feelings and no love.

- Cognition of algorithms is not inspired, adapted or guided by any sort of embodied cognition.

- Algorithms are statisticians, i.e. direct pattern recognition is still not realized. Recall that solving this problem would require a completely new cognitive architecture.

- Currently algorithms learn by induction, i.e. there is no non-monotonic or non-truth-functional (e.g. counterfactual) reasoning from empirical laws and no learning from thought experiments.

- Algorithms do not learn without explicit supervision or implicitly given guidance; there is no autonomous transfer and no creativity of any sort.

- There is no meta-cognition: no observing of the algorithm's own thinking, and no correcting or tailoring of its own thoughts to the problem.

- There is no artificial consciousness, which would be needed for the self-improvement of programs.

- There are no recipes for handling incomplete or inconsistent knowledge, or for dealing with different types of information (e.g. semantic, numeric) across different AI techniques.

- There is no independence from, e.g., statistical assumptions, i.e. algorithms lack generalizability.

- Each narrow application needs special treatment, and there is no incremental or interactive learning in real time.
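What non-monotonic reasoning means can be shown with the textbook "Tweety" example of default reasoning, sketched here as a hand-coded rule in the spirit of the symbolic paradigm rather than as a learned model. In monotonic logic, adding facts never removes a conclusion; in this sketch, learning the new fact that Tweety is a penguin withdraws the earlier conclusion that Tweety flies. The function `flies` and its exception set are illustrative assumptions.

```python
def flies(animal, facts):
    """Default rule: birds fly unless an exception is known (non-monotonic)."""
    exceptions = {"penguin", "ostrich", "injured"}  # hypothetical exception list
    if ("bird", animal) not in facts:
        return False
    return not any((e, animal) in facts for e in exceptions)


facts = {("bird", "tweety")}
by_default = flies("tweety", facts)     # concluded by default

facts.add(("penguin", "tweety"))
after_update = flies("tweety", facts)   # the new fact retracts the conclusion
print(by_default, after_update)
```

A purely statistical learner has no explicit notion of a conclusion that is held by default and later retracted; it can only shift probabilities after seeing enough penguins, which is one concrete reading of the limitation listed above.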

And as long as the SOTA of algorithmic intelligence has not coped with all of these challenges, no point of singularity is near, and AI should be considered only as an opportunity, not as a threat.


Amandeep Singh Arora

Amandeep Singh Arora is a detail-oriented statistician who leverages data analysis and modeling expertise to drive informed decision-making. He holds a Master's degree in Statistics and a bachelor's degree in Psychology from the University of Jammu. He specializes in Statistical Analysis, Data Visualization and Machine Learning. His academic foundation in psychology provides a unique understanding of human behavior, while his advanced degree in statistics equips him with the technical skills to analyze and interpret complex data in predictive modelling.
